eBPF Cilium in practice (1) - team-based network isolation

Using Cilium as the Kubernetes network service

-

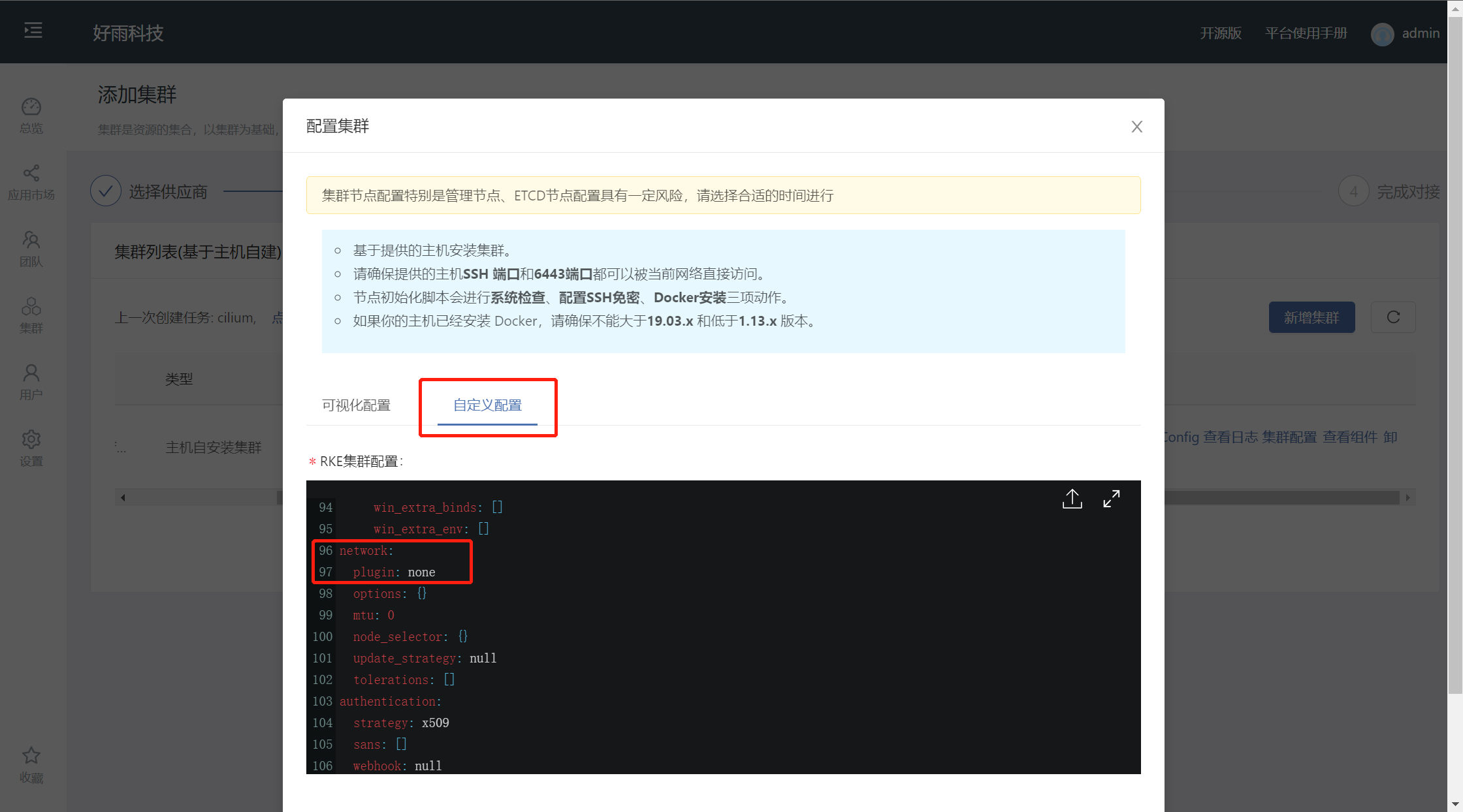

When using the installation from the host, modify the network.plugin value to none

- Install helm

wget https://goodrain-pkg.oss-cn-shanghai.aliyuncs.com/pkg/helm && chmod +x helm && mv helm /usr/local/bin/

- Deploy cilium

helm repo add cilium https://helm.cilium.io/

helm install cilium cilium/cilium --version 1.11.2 --namespace kube-system --set operator.replicas=1

Restart the pods that are not running in host networking mode so that Cilium takes over their networking:

kubectl get pods --all-namespaces -o custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name,HOSTNETWORK:.spec.hostNetwork --no-headers=true | grep '<none>' | awk '{print "-n "$1" " $2}' | xargs -L 1 -r kubectl delete pod

- Verify cilium

Download the cilium command-line tool

curl -L --remote-name-all https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz{,.sha256sum}

sha256sum --check cilium-linux-amd64.tar.gz.sha256sum

sudo tar xzvfC cilium-linux-amd64.tar.gz /usr/local/bin

rm cilium-linux-amd64.tar.gz{,.sha256sum}

- Confirm status

    $ cilium status --wait
        /¯¯\
     /¯¯\__/¯¯\    Cilium:         OK
     \__/¯¯\__/    Operator:       OK
     /¯¯\__/¯¯\    Hubble:         disabled
     \__/¯¯\__/    ClusterMesh:    disabled
        \__/

    DaemonSet         cilium             Desired: 2, Ready: 2/2, Available: 2/2
    Deployment        cilium-operator    Desired: 2, Ready: 2/2, Available: 2/2
    Containers:       cilium-operator    Running: 2
                      cilium             Running: 2
    Image versions    cilium             quay.io/cilium/cilium:v1.9.5: 2
                      cilium-operator    quay.io/cilium/operator-generic:v1.9.5: 2

- Test network connectivity (on servers located in mainland China, tests that involve external networks may fail; this does not affect normal use)

    $ cilium connectivity test
    ℹ️ Monitor aggregation detected, will skip some flow validation steps
    ✨ [k8s-cluster] Creating namespace for connectivity check...
    (...)
    ---------------------------------------------------------------------------------------------------------------------
    📋 Test Report
    ---------------------------------------------------------------------------------------------------------------------
    ✅ 69/69 tests successful (0 warnings)

Set up team network isolation

Cilium's network isolation policy follows a whitelist mechanism. As long as no network policy has been created, the network is not restricted in any way. Once a network policy is created for a set of pods, every request to those pods is rejected unless the policy explicitly allows its source.
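One way to observe this whitelist behaviour on a running cluster is to look at the per-endpoint enforcement state reported by the Cilium agent. The following is a minimal sketch rather than part of the original setup: the function name `show_policy_enforcement` is made up for illustration, and it assumes the agent DaemonSet is called `cilium` and lives in `kube-system`, as with the Helm installation above. Note that `kubectl exec ds/cilium` picks a single agent pod, so only the endpoints on that pod's node are listed.

```shell
# Hypothetical helper: print the endpoints known to one Cilium agent,
# including the "POLICY (ingress) ENFORCEMENT" column.
# Assumption: the agent DaemonSet is kube-system/cilium.
show_policy_enforcement() {
  kubectl -n kube-system exec ds/cilium -- cilium endpoint list
}

# Usage (needs access to the cluster):
#   show_policy_enforcement
```

With the default enforcement mode, the ingress column should read Disabled for a pod that no policy selects, and switch to Enabled once a policy selects it.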

- Preliminary preparation

- Create two teams, a development team and a test team, with the English names dev and test

- Create the nginx-dev component under the development team and the nginx-test component under the test team, enable the internal port on each, and set the internal domain names to nginx-dev and nginx-test respectively

- Create a client component under each of the development and test teams

- No restrictions

Without any special restrictions, all services can communicate freely across teams.

-

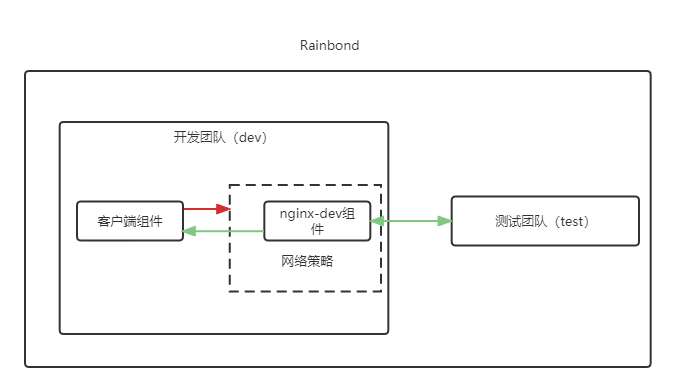

Restrictions only allow components within this team to access each other, and isolate other teams from accessing

In actual production, multiple teams such as development, testing, and production may be deployed in a cluster at the same time. Based on security considerations, it is necessary to isolate each team from the network and prohibit other teams from accessing it. The development team is used as an example to illustrate how to restrict access to it from other teams.

- Cilium network policy file (dev-ingress.yaml)

    apiVersion: "cilium.io/v2"
    kind: CiliumNetworkPolicy
    metadata:
      name: "dev-namespace-ingress"
    spec:
      endpointSelector:
        matchLabels:
          "k8s:io.kubernetes.pod.namespace": dev
      ingress:
      - fromEndpoints:
        - matchLabels:
            "k8s:io.kubernetes.pod.namespace": dev

- Create a policy

kubectl create -f dev-ingress.yaml -n dev
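Since several teams may share one cluster, the same policy can be produced for any team namespace. The sketch below is an illustration only: the function name `namespace_isolation_policy` and the output file name in the usage comment are hypothetical, and the generated YAML simply repeats the dev policy above with the namespace substituted.

```shell
# Hypothetical helper: print a namespace-isolation policy for one team.
# $1 = team namespace (for example: dev, test)
namespace_isolation_policy() {
  local ns="$1"
  if [ -z "$ns" ]; then
    echo "usage: namespace_isolation_policy <namespace>" >&2
    return 1
  fi
  # Same structure as dev-ingress.yaml: pods in the namespace accept
  # ingress traffic only from pods in the same namespace.
  cat <<EOF
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "${ns}-namespace-ingress"
spec:
  endpointSelector:
    matchLabels:
      "k8s:io.kubernetes.pod.namespace": ${ns}
  ingress:
  - fromEndpoints:
    - matchLabels:
        "k8s:io.kubernetes.pod.namespace": ${ns}
EOF
}

# Usage: write the policy for the test team to a file, then create it
# the same way as dev-ingress.yaml:
#   namespace_isolation_policy test > test-ingress.yaml
#   kubectl create -f test-ingress.yaml -n test
```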

- Confirm the policy

$ kubectl get CiliumNetworkPolicy -A

NAMESPACE NAME AGE

dev dev-namespace-ingress 39s

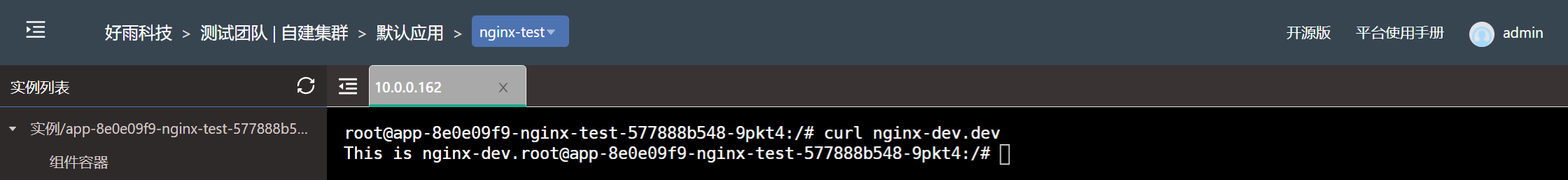

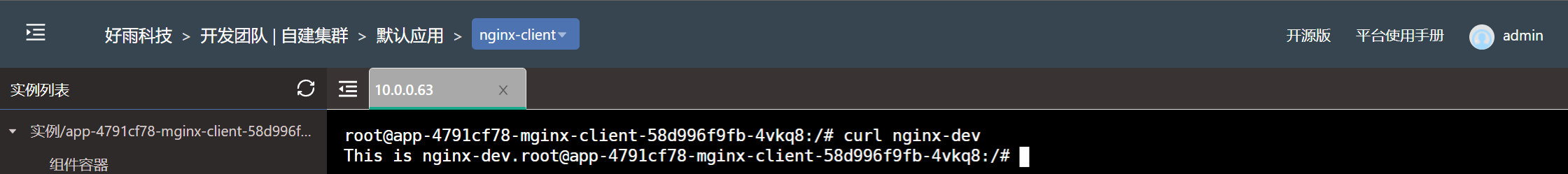

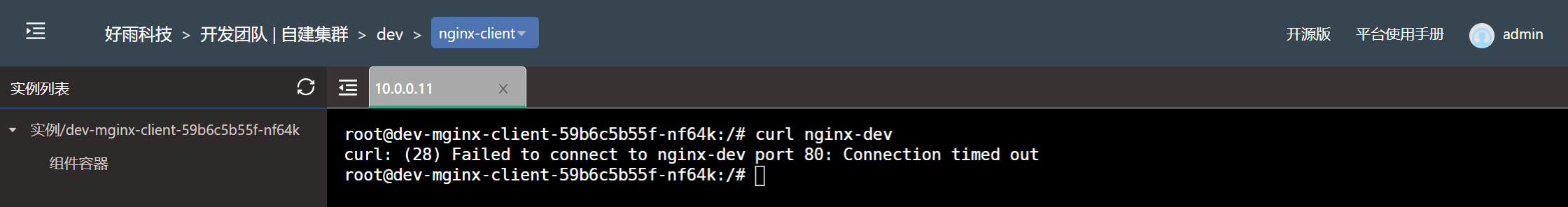

- Test effect
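The effect can also be checked from the command line by sending a request from inside a client pod. This is a minimal sketch under stated assumptions: the function name `check_access` is made up, the pod names and URL in the usage comment are placeholders, and the client container is assumed to ship `curl`. With the dev-namespace-ingress policy in place, a request from the client in dev is expected to succeed, while a request from the client in test is expected to time out.

```shell
# Hypothetical helper: try an HTTP request from inside a pod and report
# whether it gets through.
# $1 = namespace of the client pod, $2 = client pod name, $3 = target URL
# Assumption: curl is available in the client container.
check_access() {
  if kubectl exec -n "$1" "$2" -- curl -s -o /dev/null --max-time 5 "$3"; then
    echo "allowed: $1/$2 -> $3"
  else
    echo "blocked or unreachable: $1/$2 -> $3"
  fi
}

# Usage (pod names and URL are placeholders, replace them with real ones):
#   check_access dev  <client-pod-in-dev>  http://<nginx-dev-address>
#   check_access test <client-pod-in-test> http://<nginx-dev-address>
```

A failed request can also have causes other than the policy, such as a wrong address, so the second message is deliberately worded as "blocked or unreachable".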

-

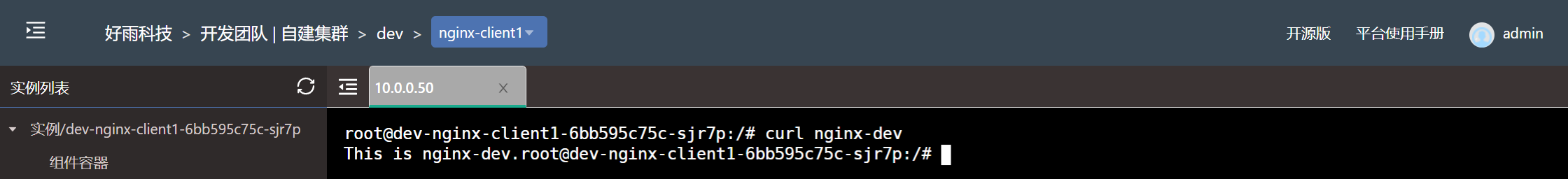

Set the nginx-dev component under the development team to only allow components under the test team to access

In some cases, the security requirements of some components will be more stringent, and only some components within the team that meet the requirements may be allowed to access. The following uses nginx-dev as an example to illustrate how to restrict access to only some components.

- Cilium network policy file (nginx-dev-ingress0.yaml)

    apiVersion: "cilium.io/v2"
    kind: CiliumNetworkPolicy
    metadata:
      name: "nginx-dev-ingress0"
    spec:
      endpointSelector:
        matchLabels:
          name: grc156cb
      ingress:
      - fromEndpoints:
        - matchLabels:
            name:

- Create a policy

kubectl create -f nginx-dev-ingress0.yaml -n dev

- Confirm the policy

$ kubectl get CiliumNetworkPolicy -A

NAMESPACE NAME AGE

dev nginx-dev-ingress0 85s

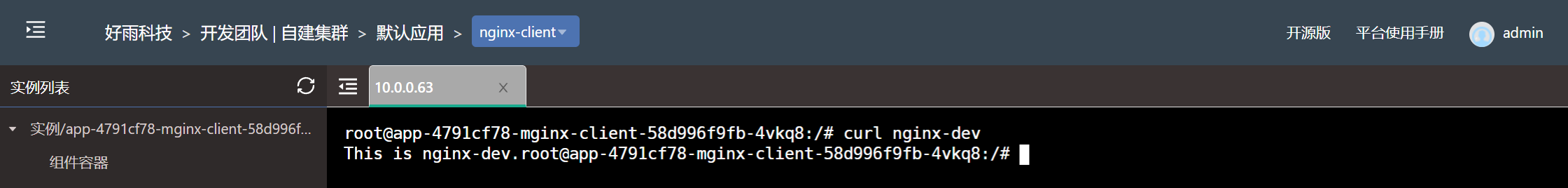

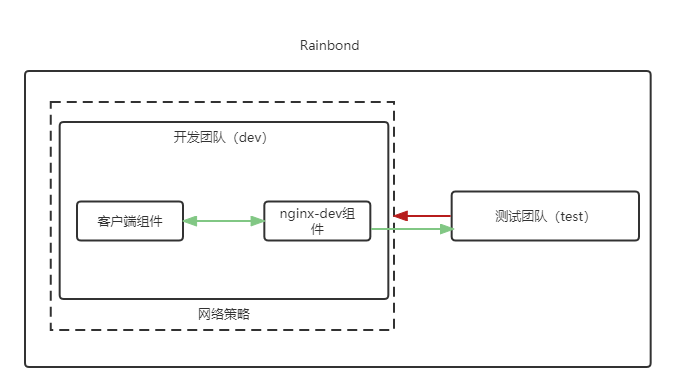

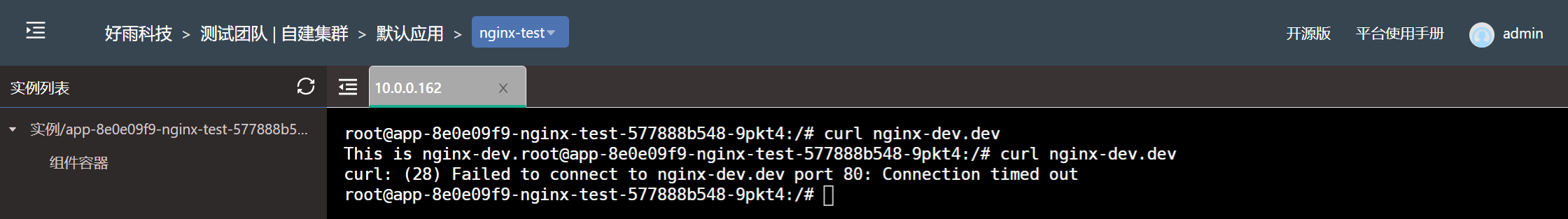

- Test effect

-

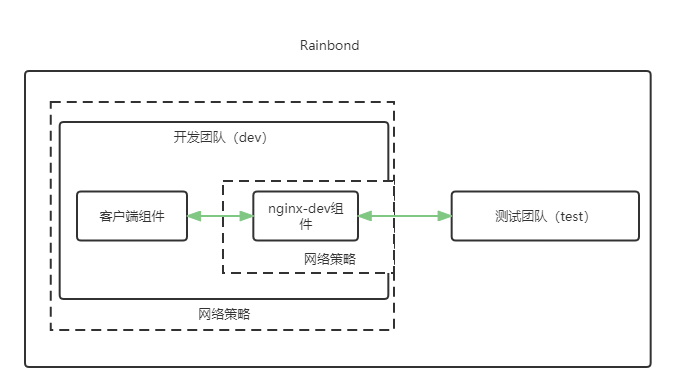

While setting the development team to allow the components under this team to access, allow the nginx-dev component under the development team to be accessed by any component in the test team

When the team network isolation is set, sometimes it is necessary to temporarily open some components for other teams to access for debugging. The following uses the nginx-dev component as an example to illustrate how to open the access rights of external teams when network isolation is set.

- Cilium network policy file (nginx-dev-ingress1.yaml)

    apiVersion: "cilium.io/v2"
    kind: CiliumNetworkPolicy
    metadata:
      name: "nginx-dev-ingress1"
    spec:
      endpointSelector:
        matchLabels:
          name: grc156cb
      ingress:
      - fromEndpoints:
        - matchLabels:
            "k8s:io.kubernetes.pod.namespace": test

- Create a policy

kubectl create -f dev-ingress.yaml -n dev

kubectl create -f nginx-dev-ingress1.yaml -n dev

- Confirm the policy

$ kubectl get CiliumNetworkPolicy -A

NAMESPACE NAME AGE

dev dev-namespace-ingress 19s

dev nginx-dev-ingress1 12s

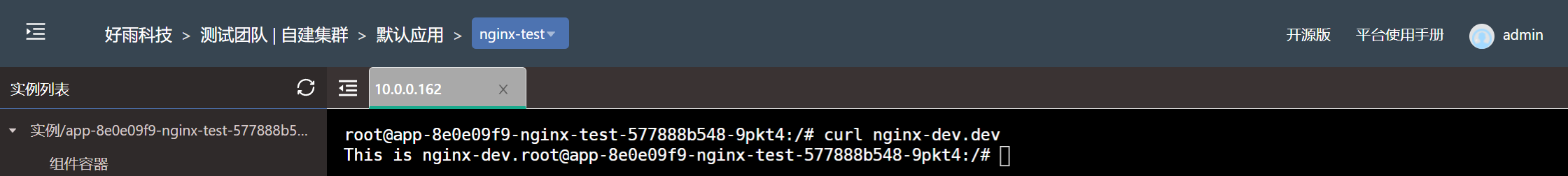

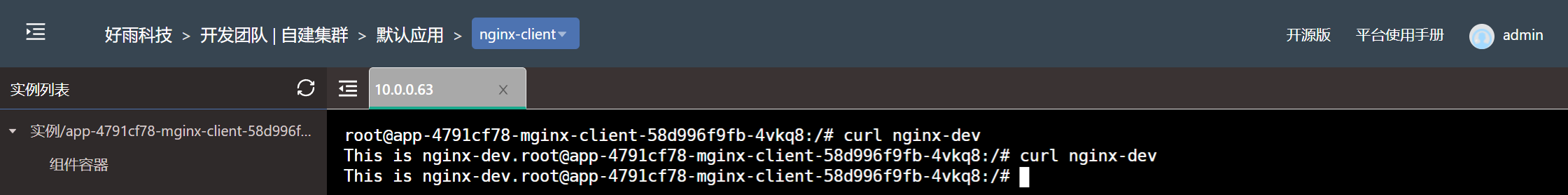

- Test effect
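Because the opening is meant to be temporary, it can be closed again once debugging is finished by deleting only the component-level policy. The sketch below is illustrative: the function name `close_debug_access` is hypothetical, and its defaults are the policy name and namespace used in this example. The dev-namespace-ingress policy is left in place, so the development team stays isolated from other teams.

```shell
# Hypothetical helper: remove the temporary debugging policy again.
# $1 = policy name (default: nginx-dev-ingress1)
# $2 = namespace   (default: dev)
close_debug_access() {
  local policy="${1:-nginx-dev-ingress1}"
  local namespace="${2:-dev}"
  kubectl delete ciliumnetworkpolicy "$policy" -n "$namespace"
}

# Usage (needs access to the cluster):
#   close_debug_access
#   kubectl get CiliumNetworkPolicy -A   # only dev-namespace-ingress should remain
```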